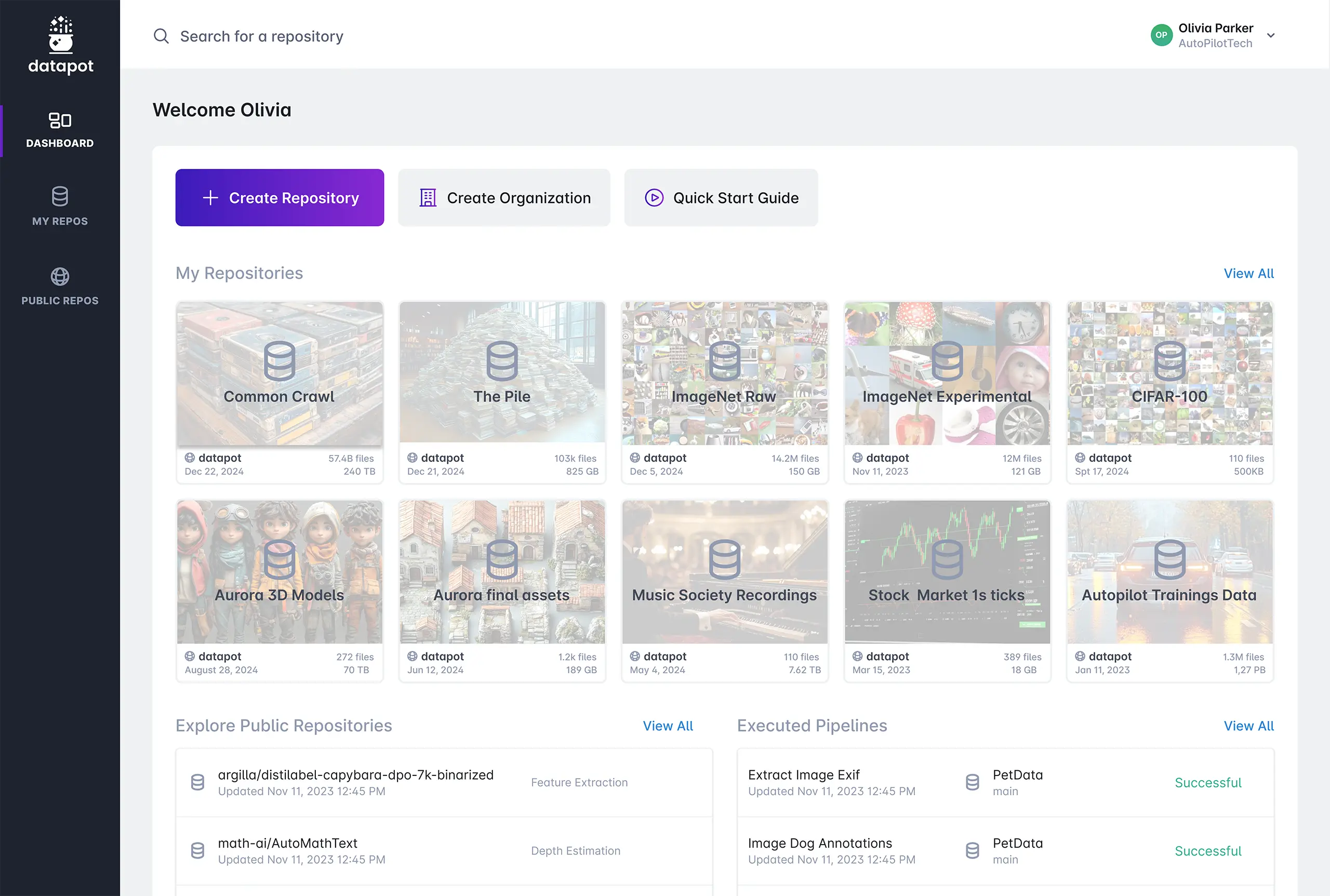

Version control for data at scale

Everything you need for data version control at scale on a single platform

Data Version Control

Datapot’s version control technology tracks and manages every change made to users’ data.

Data Pipelines

Datapot simplifies data preparation with configurable data pipelines, which automate and streamline workflows.

Data Storage

Data Transfer

Datapot Query Language (DQL)

Metadata Support

65% time reduction in data preparation

27x faster data distribution across clusters

80% savings on data storage with data deduplication

Built for industries handling massive data

AI researchers and engineers manage large datasets every day. From data collection to pre-processing for model training, these tasks consume valuable time and energy and slow down innovation. Constant demands for rigorous versioning, consistent data processing, and streamlined collaboration add further complexity, often requiring custom scripts and specialized infrastructure that are time-consuming to develop and maintain and prone to error. As the data grows, these challenges multiply, leading to inefficiencies and bottlenecks in the research and development process.

In the game development industry, teams often manage massive digital assets, such as 3D models, textures, and audio files, across hundreds of team members, which can be a multi-dimensional puzzle. Ensuring that everyone works with the correct versions of the data is crucial, but it can also be a logistical nightmare. The need to version, transform, and distribute large files frequently leads to inefficiencies, errors, and costly delays. Furthermore, distributing these assets across developer workstations can slow down the development process.

Managing the vast amounts of data generated in movie production and digital media projects is a constant challenge. High-definition video files, audio tracks, and visual effects elements can quickly accumulate into terabytes of data, making it difficult to keep track of versions, ensure consistency, and distribute assets across multiple editing or rendering workstations. Coordinating these tasks often requires dedicated IT staff and complex infrastructure, which can be both costly and time-consuming. The pressure to meet tight production deadlines only exacerbates these challenges, leading to potential delays and increased stress on the team.

Data management challenges are universal, but they manifest differently depending on the size of the organization. Startups often have to manage large repositories without the resources for dedicated IT staff or the budget for complex infrastructure. This can lead to disorganization, slow progress, and frequent errors as teams struggle to keep up with their data needs. Large enterprises, on the other hand, must scale their data management processes across multiple teams and departments while ensuring consistency, reliability, and efficiency. Both scenarios can result in wasted time, increased costs, and a diversion of focus from core business activities.

Having worked for decades on projects using extensive datasets, we experienced versioning chaos, waste of time, endless frustrations firsthand, and finally decided to do something about it. Even just to maintain our own sanity.

— Bernhard Glück, Datapot Founder

Store. Stir. Serve.

Ready to turn your data into magic with Datapot?

©2025 AItive Data GmbH. All rights reserved.